Imagine controlling your device just by moving your head, without touching any buttons.

Recognizing head gestures such as nodding, shaking, and other general head movements can be relevant in applications like safety helmets, VR headsets, and other forms of gear worn on the head.

Edge AI is well suited to these applications because it recognizes complex gestures at the sensor level while saving power.

This use case shows an example of head gesture recognition using the ST MEMS sensors on the SensorTile.box PRO board.

Application principle

The classification is based on accelerometer and gyroscope data coming from the LSM6DSV16X IMU (inertial measurement unit) available in the SensorTile.box PRO board.

Approach

Three classes are recognized by the Machine Learning Core (MLC) configuration of the LSM6DSV16X: nod, shake, other.

The accelerometer and gyroscope data rates are set to 30 Hz, with full scales of ±4 g and ±125 dps, respectively.
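As a rough illustration of what these settings mean for the raw data, the sketch below converts signed 16-bit sensor readings to physical units. The sensitivity values (0.122 mg/LSB for the 4 g full scale and 4.375 mdps/LSB for the 125 dps full scale) are assumed to match the LSM6DSV16X datasheet and should be checked against it; the function names are hypothetical.

```python
# Assumed sensitivities for the full scales used in this use case.
# Verify both values against the LSM6DSV16X datasheet before relying on them.
ACC_SENS_MG_PER_LSB = 0.122      # accelerometer, 4 g full scale
GYRO_SENS_MDPS_PER_LSB = 4.375   # gyroscope, 125 dps full scale


def raw_to_g(raw: int) -> float:
    """Convert a signed 16-bit accelerometer reading to g."""
    return raw * ACC_SENS_MG_PER_LSB / 1000.0


def raw_to_dps(raw: int) -> float:
    """Convert a signed 16-bit gyroscope reading to degrees per second."""
    return raw * GYRO_SENS_MDPS_PER_LSB / 1000.0


if __name__ == "__main__":
    # A reading of about 8197 LSB corresponds to roughly 1 g under these assumptions.
    print(f"{raw_to_g(8197):.3f} g")
    print(f"{raw_to_dps(1000):.3f} dps")
```

Keeping the full scales low gives finer resolution for the small accelerations and rotation rates typical of head movements.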

The classification itself is performed by a decision tree running in the LSM6DSV16X MLC.
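To give a feel for how a decision tree can separate these classes, here is a minimal sketch in Python. It is not the tree generated for this use case: the features (per-window gyroscope variance on two axes), the axis naming, the thresholds, and the one-second window are all hypothetical choices made for illustration.

```python
from statistics import pvariance


def classify_window(gyro_pitch_dps, gyro_yaw_dps,
                    motion_threshold=100.0, dominance_ratio=2.0):
    """Classify one window of gyroscope samples as nod, shake, or other.

    Hypothetical logic: a nod is assumed to be mostly rotation about the
    pitch axis and a shake mostly rotation about the yaw axis. Which sensor
    axes these map to depends on how the board is mounted.
    """
    var_pitch = pvariance(gyro_pitch_dps)
    var_yaw = pvariance(gyro_yaw_dps)

    # Too little rotation on both axes: not a gesture.
    if max(var_pitch, var_yaw) < motion_threshold:
        return "other"
    # One axis clearly dominates: nod or shake.
    if var_pitch > dominance_ratio * var_yaw:
        return "nod"
    if var_yaw > dominance_ratio * var_pitch:
        return "shake"
    # Motion without a dominant axis: general head movement.
    return "other"


if __name__ == "__main__":
    import math

    # One second of synthetic data at 30 Hz (window length is an assumption).
    swing = [40.0 * math.sin(2 * math.pi * 2 * n / 30) for n in range(30)]
    still = [0.0] * 30
    print(classify_window(swing, still))
    print(classify_window(still, swing))
    print(classify_window(still, still))
```

A tree trained with a tool such as ST AIoT Craft would select its own features and thresholds from the recorded data, so the structure above is only meant to show the kind of comparison each node makes.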

Sensor

The LSM6DSV16X is a 6-axis inertial measurement unit (IMU) with embedded AI, sensor fusion, and Qvar sensing for high-end applications.

The LSM6DSV16X sensor is included in the SensorTile.box PRO board, a ready-to-use programmable wireless box kit for developing any IoT application including motion and environmental data sensing, along with a digital microphone.

Dataset and model

Dataset: three classes (nod, shake, other).

The accelerometer and gyroscope data rates are set to 30 Hz, with full scales of ±4 g and ±125 dps, respectively.

Model: the model was trained on a dataset of around 360 seconds, balanced equally across the three classes.
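The dataset figures translate into sample counts with simple arithmetic. The sketch below works through it, treating the approximate 360-second duration as exact; the 30-sample window length is a hypothetical value chosen only to show how many feature windows such a dataset would yield.

```python
ODR_HZ = 30                  # data rate stated for this use case
DATASET_SECONDS = 360        # "around 360 seconds", treated as exact here
CLASSES = ("nod", "shake", "other")
WINDOW_SAMPLES = 30          # hypothetical window length (1 s at 30 Hz)

# Balanced dataset: each class gets an equal share of the recording time.
seconds_per_class = DATASET_SECONDS / len(CLASSES)
samples_per_class = int(seconds_per_class * ODR_HZ)
total_samples = samples_per_class * len(CLASSES)
windows_per_class = samples_per_class // WINDOW_SAMPLES

if __name__ == "__main__":
    print(f"{seconds_per_class:.0f} s per class")
    print(f"{samples_per_class} samples per class, {total_samples} in total")
    print(f"{windows_per_class} windows per class")
```

Under these assumptions each class contributes about 120 seconds, or roughly 3600 samples per sensor axis.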

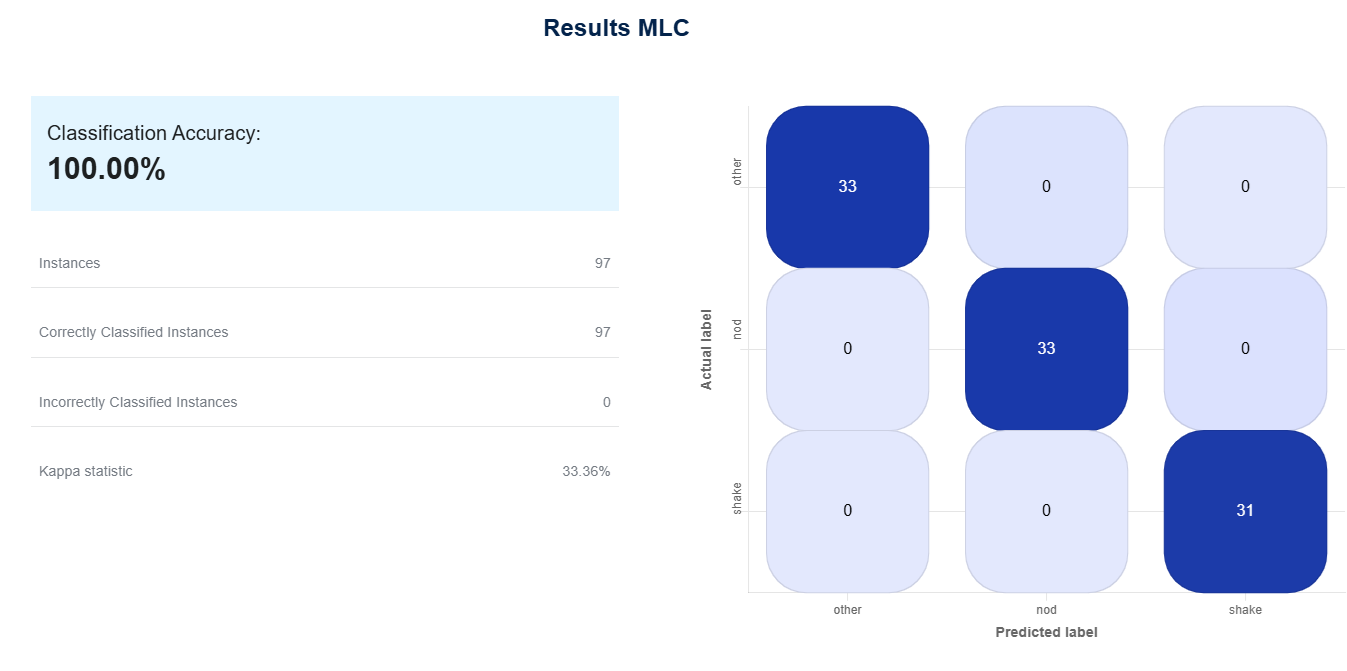

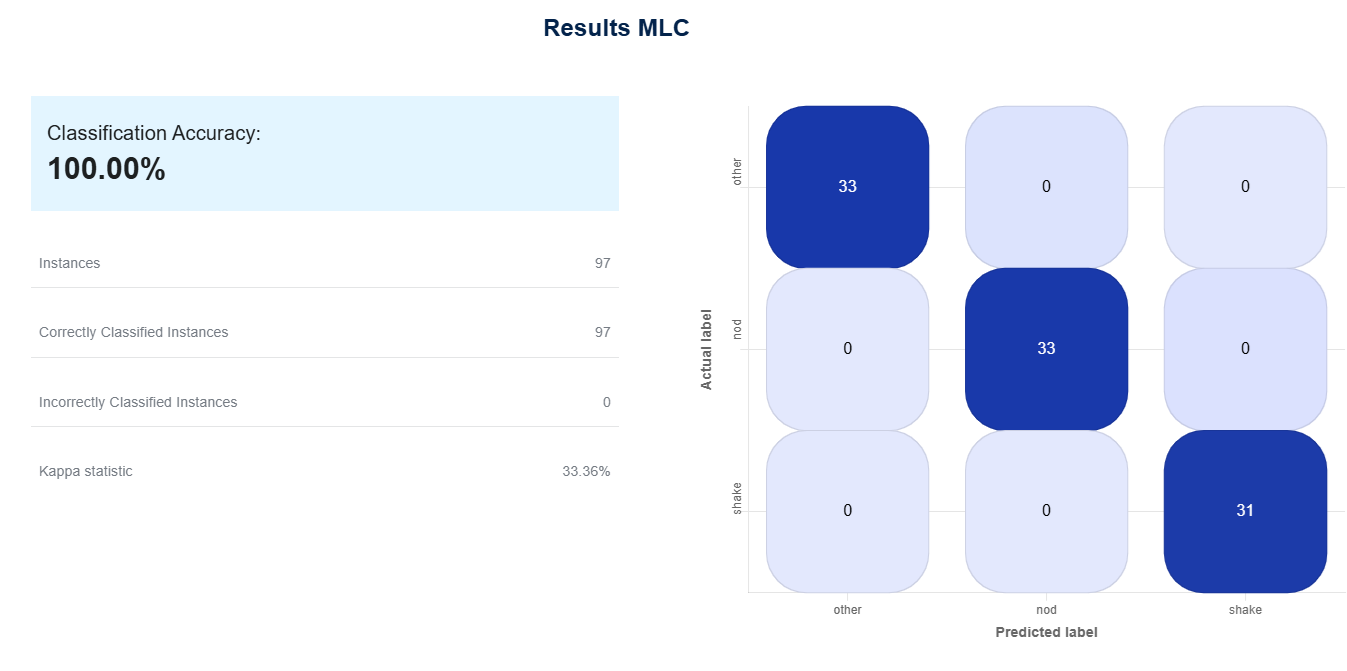

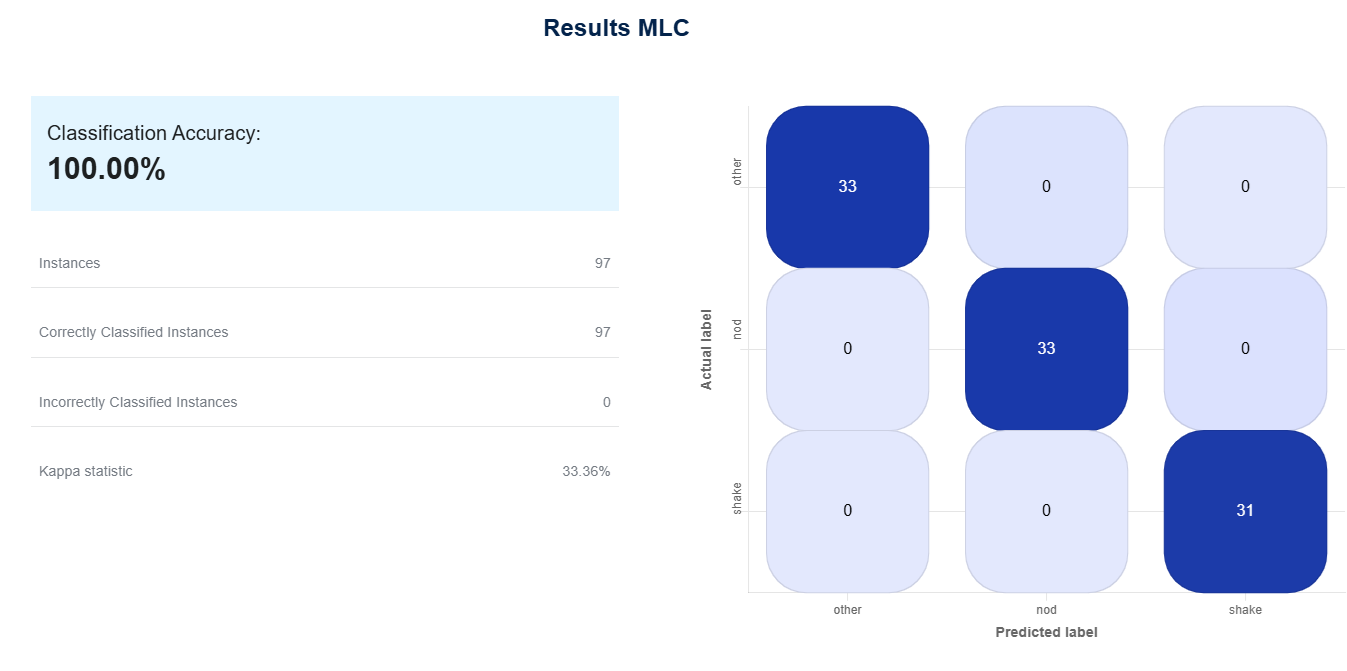

Results

The resulting decision tree model shows 100% accuracy.

Easily recreate this use case with the following resources:

- Open the use case with ST AIoT Craft

- Since recognition can be position-dependent, you can also clone this project to retrain it and fit your own use case, for example if you need to classify events that might involve the headset itself.

- To use this example, place the board firmly and vertically above the user's right ear, with the USB port facing down toward the ear and the LEDs and logos facing outward.


Author: Michele FERRAINA | Last update: February, 2025

Resources

Optimized with ST AIoT Craft

ST AIoT Craft enables the development of IoT solutions using in-sensor AI and ST components. It allows profiling decision tree algorithms within the machine learning core of ST MEMS sensors and deploying sensor-to-cloud solutions.

Most suitable for LSM6DSV16X

The LSM6DSV16X is a small, high-performance, low-power 6-axis IMU featuring a 3-axis digital accelerometer and a 3-axis digital gyroscope. It also includes a Machine Learning Core (MLC) for running machine learning algorithms inside the sensor.