Product overview

Description

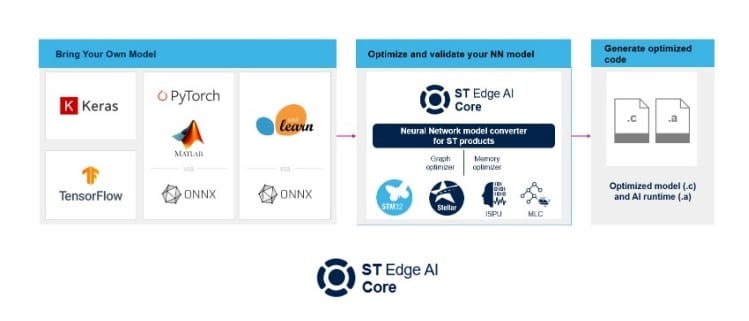

STEdgeAI-Core is a free-of-charge desktop tool to evaluate, optimize and compile edge AI models for multiple ST products, including microcontrollers, microprocessors, Neural-ART accelerator, and smart sensors with ISPU and MLC.

It is available as a command-line interface (CLI) and automatically converts pretrained artificial intelligence algorithms, including neural network and classical machine learning models, into equivalent optimized C code to be embedded in the application.

The generated optimized library offers an easy-to-use and developer-friendly way to deploy AI on edge devices.

When optimizing NN models for the Neural-ART accelerator NPU, the tool generates microcode that maps AI operations onto the NPU when possible and falls back to the CPU when not. This scheduling is done at the operator level to maximize AI hardware acceleration.
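The operator-level fallback described above can be pictured with a small conceptual sketch. This is not the tool's actual scheduler or API: the `Op` type, the `assign_devices` function, and the set of NPU-supported operator kinds are all hypothetical names chosen for illustration. It only shows the idea of deciding, operator by operator, whether to run on the NPU or the CPU.

```python
from dataclasses import dataclass

# Hypothetical set of operator kinds the accelerator can execute.
# The real supported set is defined by the tool and is not listed here.
NPU_SUPPORTED = {"conv2d", "depthwise_conv2d", "relu", "add"}


@dataclass
class Op:
    name: str  # operator instance name in the model graph
    kind: str  # operator type, e.g. "conv2d"


def assign_devices(ops, npu_supported=NPU_SUPPORTED):
    """Map each operator to 'NPU' when its kind is supported, else 'CPU'."""
    return [
        (op.name, "NPU" if op.kind in npu_supported else "CPU")
        for op in ops
    ]


# A toy three-operator model; "softmax" is assumed unsupported on the NPU.
model = [
    Op("conv_1", "conv2d"),
    Op("relu_1", "relu"),
    Op("softmax_1", "softmax"),
]

plan = assign_devices(model)
for name, device in plan:
    print(f"{name}: {device}")
```

Because the decision is made per operator rather than per model, a single unsupported operator does not force the whole network onto the CPU.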

The tool offers several ways to benchmark and validate artificial intelligence algorithms, both on a personal workstation (Windows, Linux, Mac) and directly on the target ST platform.

The user manual is integrated into the tool itself in a convenient HTML format.

The STEdgeAI-Core technology is part of the ST Edge AI Suite, an integrated collection of software tools designed to facilitate the development and deployment of embedded AI applications.

This comprehensive suite supports both optimization and deployment of machine learning algorithms.

The suite aids in managing neural network models, easing the process from data gathering to hardware deployment for users in different fields.

The ST Edge AI Suite supports various ST products: STM32 microcontrollers and microprocessors, Stellar microcontrollers, and smart sensors.

The ST Edge AI Suite is a strategic tool democratizing edge AI for developers, enabling efficient, effective AI deployment in embedded systems.

All features

- Generation of an optimized library from pre-trained neural network and classical machine learning models for supported ST products

- Support of STM32 microcontrollers and microprocessors, Stellar microcontrollers, and smart sensors with ISPU and MLC

- Support of ST Neural-ART accelerator™ neural processing unit (NPU) for AI/ML model acceleration in hardware

- Support for various deep learning frameworks such as Keras and TensorFlow™ Lite

- Support for all frameworks that can export to the ONNX standard format, such as PyTorch™ and MATLAB®

- Support for various built-in scikit-learn models, such as isolation forest, support vector machine (SVM), and K-means, via ONNX

- Detailed reporting of AI model RAM and flash memory sizes

- Detailed reporting of the AI model operations executed on the NPU or CPU, when available

- Several optimization options (time, size, or balanced)

- Validation of the optimized model against the reference model on host and on target

- Support for 32-bit float and 8-bit quantized neural network formats (TensorFlow™ Lite and ONNX tensor-oriented QDQ)

- Support for deeply quantized neural networks (down to 1-bit) from QKeras and Larq

- Desktop tool available for Windows, macOS, and Linux

- Free-of-charge, user-friendly license terms
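
For readers unfamiliar with the 8-bit quantized formats mentioned in the feature list, the following minimal sketch illustrates the general affine scheme, where a real value is approximated as `scale * (q - zero_point)` with `q` stored as a signed 8-bit integer. The function names and the example scale and zero-point values are illustrative assumptions, not part of STEdgeAI-Core, and the rounding mode used by a given framework may differ from Python's `round`.

```python
def quantize(x, scale, zero_point):
    """Map a real value to a signed 8-bit integer (affine quantization)."""
    q = round(x / scale) + zero_point
    # Clamp to the int8 range so out-of-range values saturate.
    return max(-128, min(127, q))


def dequantize(q, scale, zero_point):
    """Recover the approximate real value from its 8-bit representation."""
    return (q - zero_point) * scale


# Illustrative parameters only; real models carry their own per-tensor values.
scale, zero_point = 0.05, 3

q = quantize(1.0, scale, zero_point)
x_hat = dequantize(q, scale, zero_point)
print(q, x_hat)
```

Values outside the representable range saturate at -128 or 127, which is one source of the accuracy difference that validation against the reference model is meant to measure.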

Get Software

| Part Number | General Description | Latest version | Supplier | ECCN (EU) | ECCN (US) | Download |
|---|---|---|---|---|---|---|
| STEdgeAI-NPU | Neural-ART Accelerator (NPU) component for ST Edge AI Core | 10.0.0 | ST | NEC | 5A992.c | Get latest |
| STEAICore-MacArm | ST Edge AI Core SW Package for Mac ARM | 2.0.0 | ST | NEC | 3D991 | Get latest |
| STEAICore-Win | ST Edge AI Core SW Package for Windows | 2.0.0 | ST | NEC | 3D991 | Get latest |
| STEAICore-Linux | ST Edge AI Core SW Package for Linux | 2.0.0 | ST | NEC | 3D991 | Get latest |
| STEAICore-Mac | ST Edge AI Core SW Package for Mac | 2.0.0 | ST | NEC | 3D991 | Get latest |