Product overview

Description

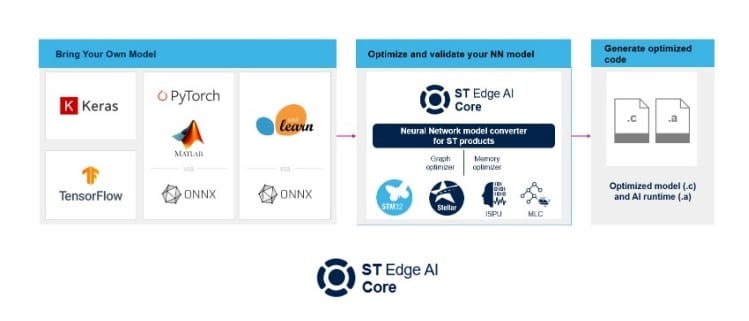

STEdgeAI-Core is a free desktop tool for evaluating, optimizing, and compiling embedded AI models for multiple ST products, including microcontrollers, microprocessors, Neural-ART accelerators, and smart sensors with ISPU and MLC.

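It is available as a command-line interface (CLI) and automatically converts pretrained AI algorithms. For example, it converts neural networks and classical machine learning models into equivalent, optimized C code that can be embedded in an application.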

The generated optimized library makes it easy for developers to deploy AI on edge devices.

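When an NN model is optimized for the Neural-ART accelerator NPU, the tool generates microcode that maps AI operations onto the NPU when they can be handled in hardware and falls back to the CPU when they cannot. This scheduling is performed at the operator level to maximize AI hardware acceleration.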
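The operator-level mapping described above can be illustrated with a minimal sketch. It is not the tool's actual scheduler: the `Op` type, the `assign_targets` helper, and the contents of `NPU_SUPPORTED` are all hypothetical and chosen only to show the idea of a per-operator NPU/CPU decision.

```python
from dataclasses import dataclass

# Hypothetical set of operator types that the accelerator can execute.
# The real list depends on the device and tool version and is not given here.
NPU_SUPPORTED = {"Conv2D", "DepthwiseConv2D", "Add", "ReLU"}


@dataclass
class Op:
    name: str  # operator instance name in the model graph
    kind: str  # operator type, for example "Conv2D"


def assign_targets(ops, npu_supported=NPU_SUPPORTED):
    """Map each operator to 'NPU' when its type is supported, else to 'CPU'."""
    return [
        (op.name, "NPU" if op.kind in npu_supported else "CPU")
        for op in ops
    ]


# Illustrative three-operator model; "Softmax" is assumed to be unsupported.
model = [Op("conv1", "Conv2D"), Op("relu1", "ReLU"), Op("softmax", "Softmax")]
plan = assign_targets(model)
for name, target in plan:
    print(f"{name}: {target}")
```

Because the decision is made per operator rather than per model, a single unsupported operator sends only that operator to the CPU, and the rest of the network stays on the accelerator.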

The tool provides several ways to benchmark and validate AI algorithms, both on a personal workstation (Windows, Linux, Mac) and on the target ST platform.

The user manual is integrated into the tool itself in a convenient HTML format.

The STEdgeAI-Core technology is part of the ST Edge AI Suite, an integrated set of software tools designed to support the development and deployment of embedded AI applications.

This comprehensive suite covers both the optimization and the deployment of machine learning algorithms.

It supports the whole process from data collection to deployment on hardware, which helps users across various disciplines manage neural network models.

The ST Edge AI Suite supports a range of ST products, including STM32 microcontrollers and microprocessors, Stellar microcontrollers, and smart sensors.

The ST Edge AI Suite is a strategic tool that democratizes embedded AI for developers, enabling efficient and effective AI deployment in embedded systems.


Features

- Generation of optimized libraries for supported ST products from pretrained neural networks and classical machine learning models

- Support for STM32 microcontrollers and microprocessors, Stellar microcontrollers, and smart sensors with ISPU and MLC

- Support for the ST Neural-ART Accelerator™ neural processing unit (NPU) for hardware acceleration of AI/ML models

- Support for various deep learning frameworks such as Keras and TensorFlow™ Lite

- Support for any framework that can export to the ONNX standard format, such as PyTorch™ and MATLAB®

- Support, through ONNX, for various built-in scikit-learn models such as isolation forest, support vector machine (SVM), and K-means

- Detailed information on the RAM and flash memory size of AI models

- Detailed information on which AI model operations run on the NPU or on the CPU (when applicable)

- Multiple optimization options available (time, size, balanced)

- Validation of optimized models against the reference model, on both host and target

- Support for neural network formats using 32-bit floating point and 8-bit quantization (TensorFlow™ Lite and ONNX tensor-oriented QDQ)

- Support for deeply quantized neural networks (down to 1 bit) from QKeras and Larq

- Desktop tool available for Windows, macOS, and Linux

- Free, user-friendly license terms
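As background for the 8-bit quantized formats listed above, the following sketch shows the affine (scale and zero-point) arithmetic commonly used for int8 tensors, where a real value is approximated as `scale * (q - zero_point)`. The `quantize` and `dequantize` helpers and the chosen `scale` and `zero_point` values are illustrative assumptions, not output from or part of STEdgeAI-Core.

```python
def quantize(x, scale, zero_point, qmin=-128, qmax=127):
    """Convert a real value to an int8 code, saturating at the int8 range."""
    q = round(x / scale) + zero_point
    return max(qmin, min(qmax, q))


def dequantize(q, scale, zero_point):
    """Recover the approximate real value represented by an int8 code."""
    return scale * (q - zero_point)


# Illustrative parameters; real values come from the quantized model file.
scale, zero_point = 0.05, -10

values = [-1.0, 0.0, 0.5, 10.0]
quantized = [quantize(v, scale, zero_point) for v in values]
restored = [dequantize(q, scale, zero_point) for q in quantized]

for v, q, r in zip(values, quantized, restored):
    print(f"{v:6.2f} -> {q:4d} -> {r:6.2f}")
```

The last input, 10.0, falls outside the representable range for these parameters and saturates at 127, which is why the choice of scale and zero point matters for accuracy. Each int8 value occupies one byte instead of the four bytes of a 32-bit float, which is the main source of the memory savings.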